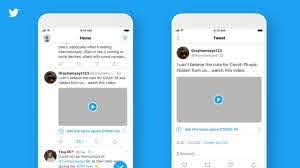

As Twitter continues to add warning labels to tweets that make potentially untrue claims about the results of the US Election and the voting process, it's also apparently testing new warnings that expand on the current pop-ups shown when users attempt to retweet a disputed update.

As you can see in this example, posted by reverse engineering expert Jane Manchun Wong, Twitter is trying out a new warning when people go to Like a disputed tweet. It's identical to the current warning shown when you go to retweet the same tweet, except that the 'Quote tweet' option at the bottom is replaced by a 'Like' option.

Twitter has reported notable results from adding more friction to sharing and prompting users to think more about what they're doing.

Back in June, Twitter added a new pop-up alert on articles that users attempt to retweet without first opening the link and reading the article.

Twitter found that these prompts led people to open articles 40% more often, showing that even simple reminders can have a meaningful impact on user behavior.

In the lead-up to the US Election, Twitter removed the straight retweet option for all US users, so that tapping Retweet opens a Quote Tweet window instead. This is another measure to slow down sharing momentum, while, as noted, Twitter has also been working to quickly apply its warning labels to tweets that make false claims about the election.

Adding a pop-up on Likes could be another way to reduce the spread of misinformation. When a user Likes a tweet, that tweet becomes eligible to show up in their followers' feeds as a recommended discussion, which makes Likes another potential avenue of distribution.

If such prompts are effective, having these pop-ups on both Retweets and Likes could significantly reduce the spread of disputed claims.

Facebook is also reportedly looking to add more friction to the sharing of political posts in order to slow the momentum of conspiracy-fueling content. With many claims still swirling around the official election results, it remains a tense situation, and one that Facebook and Twitter in particular need to manage. They don't want to play any part in fueling dissent, but they also don't want to stop people from engaging in civic discussion.

Adding more reminders and prompts may help Twitter's team stay on top of the escalating chatter, at least until any official investigations are completed.