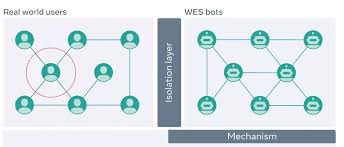

That’s pretty much what Facebook has created. To test more accurately for security vulnerabilities and potentially harmful actions, Facebook has developed a new system called WES (Web-Enabled Simulation), which uses bots modeled on human behavior to replicate real usage patterns.

This feels… concerning. Robots have their own social network now?

As per Facebook:

“Bots are trained to interact with each other using the same infrastructure as real users, so they can send messages to other bots, comment on bots’ posts or publish their own, or make friend requests to other bots.”

And there are a lot of bots interacting on the WES platform:

“WES is able to automate interactions between thousands or even millions of bots. We are using a combination of online and offline simulation, training bots with anything from simple rules, and supervised machine learning to more sophisticated reinforcement learning.”

That feels a little scary, right? A society of bot profiles chatting amongst themselves. Plotting our demise.

Of course, they’re modeled on human behavior, based on Facebook posts, so really, they’re probably just sharing GIFs and discussing conspiracy theories. But still, there’s something that feels a little off about it.


Concerns aside, the real aim of the project is, as noted, to test for potential vulnerabilities, so that Facebook can detect and address them before they affect real people.

“We can, for example, create realistic AI bots that seek to buy items that aren’t allowed on our platform, like guns or drugs. Because the bot is acting in the actual production version of Facebook, it can conduct searches, visit pages, send messages, and take other actions just as a real person might.”

So the idea of the simulation is not to let the robots develop their own network to engage and interact, but to replicate real user behavior, as closely as robotically possible, in order to help Facebook improve its systems. That is far less concerning, but even so, Facebook includes a couple of variations of this line in its post on the project:

“Bots cannot interact with actual Facebook users and their behavior cannot impact the experience of real users on the platform.”

So we’re safe, we’re fine, and it’s okay for robots to have their own Facebook where they can interact with their virtual family and friends. But still, with the advent of AI, one does wonder whether enabling bots to develop an emotional connection is a good thing.

The benefits of the project make sense, but I do want to see what, exactly, the bots discuss on their social network.

You can read more about Facebook’s WES project here.
